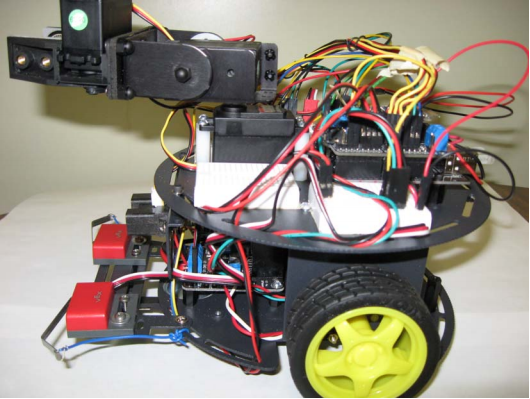

We were a week and a half away from heading to the FIRST Robotics World Championship in St. Louis, and our vision targeting system needed some serious TLC. At our last regional we had swapped out our old system, which ran on board the cRIO, for a new vision system that I had written using the SmartDashboard, which allowed us to offload the vision processing to the driver station. The new system was way better for a number of reasons, but admittedly its performance was about the same.

In addition to changing where the vision processing was done, I also totally changed the algorithm to use higher-level OpenCV functions, as opposed to the old system, which was a crude stride detector operating on raw pixels, implemented by another mentor. For Worlds there was some debate over which approach was better and, frankly, despite what many people may have thought, we really had no idea how well either approach actually worked.

I’ve come to notice a common pitfall when dealing with so-called “real” systems: people tend to make a small change, watch it work once, and think they have fixed or improved it. I understand why. There is a ton of temptation to simply try your new idea, and that isn’t wrong; it’s just that sometimes we need to take a step back and do it properly. This was one of those times, and it presented a great opportunity to teach the students about proper testing and ground truth, and of course to explain this pitfall.

So I headed to the warehouse where our field was set up and, using a simple extension to the SmartDashboard that I wrote, collected 1000 images of the vision targets at various orientations and angles. The vision target for reference:

You wouldn’t think that finding a giant glowing green rectangle would present much of a problem. I mean, I wish all computer vision problems were this simple, but nonetheless there are some details that make it tricky. The net likes to obstruct the rectangle, causing a break in it. This can be easily fixed with morphological operators, but those can get you into trouble by joining the target to the stadium lights above it. TL;DR: yeah, it’s easy, but still not trivial.
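To make that trade-off concrete, here is a minimal sketch of morphological closing (dilate, then erode) on a single column of thresholded pixels. It is plain Python with made-up data rather than our actual pipeline; the `column` layout and the kernel sizes are assumptions chosen for illustration. In OpenCV the same idea would go through `cv2.morphologyEx` with `cv2.MORPH_CLOSE`.

```python
def dilate(pixels, k):
    """A pixel turns on if any pixel within the k-wide window is on."""
    r = k // 2
    return [int(any(pixels[max(0, i - r):i + r + 1])) for i in range(len(pixels))]


def erode(pixels, k):
    """A pixel stays on only if every pixel within the k-wide window is on."""
    r = k // 2
    return [int(all(pixels[max(0, i - r):i + r + 1])) for i in range(len(pixels))]


def close(pixels, k):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(pixels, k), k)


def count_blobs(pixels):
    """Count runs of consecutive 'on' pixels."""
    return sum(1 for i, p in enumerate(pixels) if p and (i == 0 or not pixels[i - 1]))


# Hypothetical vertical slice through a thresholded image, top to bottom:
# stadium lights, a 4-pixel gap, then the target split in two by the net.
column = [0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 0]

print(count_blobs(column))            # lights + two target halves
print(count_blobs(close(column, 3)))  # small kernel: net break repaired
print(count_blobs(close(column, 5)))  # larger kernel: lights merged into target
```

With a 3-pixel kernel the break from the net closes and the lights stay separate; with a 5-pixel kernel the lights get glued onto the target, which is exactly the trouble described above.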

So what am I going to do with all this data? It isn’t labelled. Oh wait, don’t I mentor a team of 40 high school students? Commence image labelling sweatshop! Seriously though, we had all hands on deck for this one: I made a simple procedure to follow and then everyone helped label the data.

Fun times! Check out the full blog post from KBotics for more pics:

http://kbotics.ca/2012/04/05/image-processing-party/

I took the labelled data and created a program to test the algorithms on it. On the first run it was pretty clear: my new algorithm found the target in ~850 of the images, while the old approach found 3… After some parameter tweaking I was able to get my algorithm to detect 997 of the 1000. notbad.jpg!
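For anyone who wants to try the same kind of test, here is a rough sketch of what such a harness can look like. It is not the program from our repo: the names (`iou`, `count_hits`, `toy_detector`), the `(x, y, width, height)` box format, and the rule that a detection counts when it overlaps the labelled box with an intersection-over-union of at least 0.5 are all assumptions made for illustration.

```python
from typing import Callable, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), an assumed label format


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    overlap_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    overlap_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = overlap_w * overlap_h
    union = aw * ah + bw * bh - inter
    return inter / union if union else 0.0


def count_hits(detector: Callable[[str], Optional[Box]],
               samples: Sequence[Tuple[str, Box]],
               threshold: float = 0.5) -> int:
    """Count the samples where the detector's box matches the labelled box."""
    hits = 0
    for image, truth in samples:
        found = detector(image)
        if found is not None and iou(found, truth) >= threshold:
            hits += 1
    return hits


# Hypothetical hand-labelled ground truth: image name -> target box.
labels = {"a.png": (10, 10, 40, 30), "b.png": (50, 20, 40, 30), "c.png": (0, 0, 40, 30)}
samples = list(labels.items())


def toy_detector(image: str) -> Optional[Box]:
    """Stand-in detector: misses one image and is slightly off on the others."""
    if image == "c.png":
        return None
    x, y, w, h = labels[image]
    return (x + 2, y, w, h)


hits = count_hits(toy_detector, samples)
print(f"{hits}/{len(samples)} targets found")
```

Running every candidate algorithm through the same `count_hits` call on the same labelled set is what turns "it worked when I tried it" into a number you can compare.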

Here is a link to the GitHub repo for our final vision system: github.com/pickle27/2809_vision2012.git

At the next KBotics meeting I presented my findings and taught all the students how our vision system works. I like to think they learned a thing or two ;)