I’ve spent a great deal of time this past month getting ARPool ready for our trip to London, UK, to appear on Stephen Fry Gadget Man. So much has happened that it is going to be tough for me to remember it all!

It began with testing all the code I wrote after OCE, which essentially worked on the first try, a minor miracle! After confirming that ARPool 2.0 ran, we spent the next week improving the calibration process. I added much better debugging visuals and several new functions, including a really handy calibration test function. It tests the mapping from image coordinates to projector coordinates by drawing test points on the table so you can confirm the calibration accuracy.
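For anyone who wants to try a similar check numerically, here is a minimal sketch. It assumes the image-to-projector mapping is a 3x3 homography (a reasonable model for a flat table, but an assumption here, not necessarily what ARPool does). The names `Pt`, `Homography`, `mapPoint` and `maxReprojectionError` are made up for illustration and are not part of ARPool.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Row-major 3x3 homography (assumed mapping model, see the text above).
using Homography = std::array<double, 9>;

// Map one image point into projector coordinates.
Pt mapPoint(const Homography& h, Pt p) {
    const double w = h[6] * p.x + h[7] * p.y + h[8];
    assert(w != 0.0);  // a zero w would send the point to infinity
    return { (h[0] * p.x + h[1] * p.y + h[2]) / w,
             (h[3] * p.x + h[4] * p.y + h[5]) / w };
}

// Largest distance between the mapped image points and the projector
// points they are supposed to land on.
double maxReprojectionError(const Homography& h,
                            const std::vector<Pt>& imagePts,
                            const std::vector<Pt>& projectorPts) {
    assert(imagePts.size() == projectorPts.size());
    double worst = 0.0;
    for (std::size_t i = 0; i < imagePts.size(); ++i) {
        const Pt m = mapPoint(h, imagePts[i]);
        const double err = std::hypot(m.x - projectorPts[i].x,
                                      m.y - projectorPts[i].y);
        worst = std::max(worst, err);
    }
    return worst;
}
```

Drawing the mapped points on the table gives the visual check described above; the worst-case error gives a single number to watch while tuning the calibration.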

With calibration looking pretty good, I moved on to the ARRecognition class. This class is the workhorse of ARPool: all the vision algorithms are in here. I started by cleaning the code and adding documentation. You heard right: in ~4 years of the project no one has documented it… I made a nice comment block above each function clearly explaining its purpose, its inputs (in detail, such as whether the image is rectified or not) and its outputs. There is also a debugging tips section.

I made many small improvements to ARRecognition and a few major ones. Notably, I re-did the motionDetection algorithm, which detects when the shot is finished and restarts the process. I also worked on the detectShot algorithm to fix a bug where another ball next to the cue ball would cause a shot to not be detected. The first major change was in the detectBalls function, where I added code for detecting balls whose blobs are joined after background subtraction. The final algorithm here is quite slick: if the blob is bigger than a single ball, matchTemplate is run to find the 2, 3, 4, etc. best balls in the image. A max search is performed on the output of matchTemplate and the location is recorded; then a black circle is drawn centered at that point before running another max search. This ensures each new peak lies at least a ball radius away from the ones already found, so the same ball is never picked twice. This change made a big difference in the performance of the system, especially since we can now be more relaxed on the background subtraction threshold: ball blobs joining is no longer an issue. I also added some code for the special case of finding the balls when they are set up for the break shot.

That explanation was kind of brutal, and if you’re actually reading this you probably like code, so here is the source!

```cpp
/************************************************************************
/ segmentBallClusters
/
/ Input: blobs image and the contour to be segmented
/        numBalls - the number of balls the contour should be segmented into
/        centers - a vector of ball centers; not an input but an output via reference
/
/ Return: the ball centers in the vector by reference
/
/ Purpose: Segment a blob into a number of balls; gets called by detectBallCenters
/          when a blob has the area of multiple balls, e.g. two balls are touching
/
/ Debugging Tips: Check the blobs image saved by detectBallCenters
/                 watch what the erode is doing
/                 imshow the "result" image
/
************************************************************************/
void ARRecognition::segmentBallClusters(cv::Mat &blobs, std::vector<cv::Point> &contour, int numBalls, std::vector<cv::Point2f> &centers)
{
    // draw the blob onto the blobs image
    std::vector<std::vector<cv::Point> > contours;
    contours.push_back(contour);
    cv::drawContours(blobs, contours, -1, cv::Scalar(255,255,255), CV_FILLED);

    // get an ROI of the blob we need to segment
    cv::Rect rect = cv::boundingRect(contour);
    cv::Mat roi = blobs(rect).clone();
    cv::cvtColor(roi, roi, CV_RGB2GRAY);

    // template for the ball: a filled white disc on a black square
    cv::Mat templ = cv::Mat::zeros(m_ball_radius*2, m_ball_radius*2, CV_8U);
    cv::circle(templ, cv::Point(m_ball_radius, m_ball_radius), m_ball_radius, cv::Scalar(255,255,255), CV_FILLED);

    cv::Mat result;
    cv::matchTemplate(roi, templ, result, CV_TM_CCOEFF_NORMED);

    // result coordinates are relative to the ROI; offset maps them back to the full image
    cv::Point offset(rect.x, rect.y);

    if(numBalls != 15)
    {
        for(int i = 0; i < numBalls; i++)
        {
            // find the maximum
            double minVal;
            double maxVal;
            cv::Point minLoc;
            cv::Point maxLoc;
            cv::minMaxLoc(result, &minVal, &maxVal, &minLoc, &maxLoc);

            // add ball center at max loc (maxLoc is the template's top-left corner)
            cv::Point center(maxLoc.x + offset.x + m_ball_radius, maxLoc.y + offset.y + m_ball_radius);
            centers.push_back(center);

            // remove this max by drawing a black circle
            cv::circle(result, maxLoc, m_ball_radius, cv::Scalar(0,0,0), CV_FILLED);

            //cv::imshow("result",result);
            //cv::waitKey();
        }
    }
    // numBalls == 15 -- Special case for the "break"
    else
    {
        // shrink the rack blob so its outline passes roughly through the outer ball centers
        cv::erode(roi, roi, cv::Mat(), cv::Point(-1,-1), (int)m_ball_radius/2);
        cv::findContours(roi.clone(), contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

        // loosen the approximation until the outline collapses to a triangle
        std::vector<cv::Point> polygon;
        double precision = 1.0;
        while(polygon.size() != 3)
        {
            //std::cout << "precision" << precision << " num polygon vertices" << polygon.size() << std::endl;
            cv::approxPolyDP(contours[0], polygon, precision, true);
            precision += 0.1;
        }

        // add offset to polygon points
        for(int k = 0; k < 3; k++)
        {
            polygon[k] += offset;
        }
        cv::polylines(blobs, polygon, true, cv::Scalar(0,255,0), 3);

        // interpolate ball centers: for each corner, the corner itself, one interior
        // point, and three points along the edge to the next corner
        centers.push_back(polygon[0]);
        centers.push_back(polygon[0] - 0.25*(polygon[0] - polygon[1]) - 0.25*(polygon[0] - polygon[2]));
        centers.push_back(polygon[0] + 0.7 * (polygon[1] - polygon[0]));
        centers.push_back(polygon[0] + 0.5 * (polygon[1] - polygon[0]));
        centers.push_back(polygon[0] + 0.3 * (polygon[1] - polygon[0]));

        centers.push_back(polygon[1]);
        centers.push_back(polygon[1] - 0.25*(polygon[1] - polygon[2]) - 0.25*(polygon[1] - polygon[0]));
        centers.push_back(polygon[1] + 0.7 * (polygon[2] - polygon[1]));
        centers.push_back(polygon[1] + 0.5 * (polygon[2] - polygon[1]));
        centers.push_back(polygon[1] + 0.3 * (polygon[2] - polygon[1]));

        centers.push_back(polygon[2]);
        centers.push_back(polygon[2] - 0.25*(polygon[2] - polygon[1]) - 0.25*(polygon[2] - polygon[0]));
        centers.push_back(polygon[2] + 0.7 * (polygon[0] - polygon[2]));
        centers.push_back(polygon[2] + 0.5 * (polygon[0] - polygon[2]));
        centers.push_back(polygon[2] + 0.3 * (polygon[0] - polygon[2]));
    }

    return;
}
```

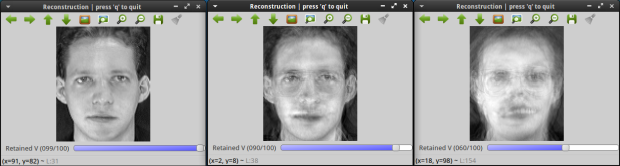

The biggest change I made was to re-do the ball identification system. The existing one was not working great and the code was very messy, which I didn’t like. I wanted to make it cleaner and easier to maintain, and I wanted it to use the OpenCV machine learning module. I gathered a bunch of training and test data and started playing around with it. I found great success with CvBoost classifiers. The new system is a bit different, as it employs different classifiers for different purposes. First, a classifier picks the cue ball from all the other balls (1 vs all) and another does the same for the 8 ball. Another classifier classifies the remaining balls as either stripes or solids, before finally 2 separate classifiers assign actual numbers. This is great because each level is a fail-safe. Our primary concern is finding the cue ball properly; next is the 8 ball. The actual number is the least important, but it is good to know if the ball is a stripe or a solid.
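The control flow of that cascade can be sketched without any OpenCV at all. What follows is only an illustration of the ordering described above, not the ARPool code: `BallFeatures`, `BallClassifiers`, `BallLabel` and `identifyBall` are hypothetical names, the two feature fields are placeholders, and each `std::function` stands in for a trained boosted classifier. It also assumes the two number classifiers are split into one for solids and one for stripes.

```cpp
#include <cassert>
#include <functional>

// Placeholder features for one ball patch (hypothetical; a real system
// would use whatever colour features the classifiers were trained on).
struct BallFeatures { double whiteness; double blackness; };

// Each callable stands in for one trained classifier.
struct BallClassifiers {
    std::function<bool(const BallFeatures&)> isCue;        // cue ball vs all
    std::function<bool(const BallFeatures&)> isEight;      // 8 ball vs all
    std::function<bool(const BallFeatures&)> isStripe;     // stripe vs solid
    std::function<int(const BallFeatures&)>  solidNumber;  // expected 1..7
    std::function<int(const BallFeatures&)>  stripeNumber; // expected 9..15
};

struct BallLabel {
    enum Kind { Cue, Eight, Solid, Stripe };
    Kind kind;
    int number;  // 0 for the cue ball
};

// Run the classifiers from most important to least important decision.
BallLabel identifyBall(const BallClassifiers& c, const BallFeatures& f) {
    assert(c.isCue && c.isEight && c.isStripe && c.solidNumber && c.stripeNumber);
    if (c.isCue(f))    return {BallLabel::Cue, 0};
    if (c.isEight(f))  return {BallLabel::Eight, 8};
    if (c.isStripe(f)) return {BallLabel::Stripe, c.stripeNumber(f)};
    return {BallLabel::Solid, c.solidNumber(f)};
}
```

The point of the ordering is that a mistake at the last level only costs the exact number: the cue ball, the 8 ball and the stripe/solid group have already been decided by then.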

With ARRecognition in the best shape it’s ever been in, I started investigating the graphics code and why our drawImage function was not working properly any more. I wasted the whole first afternoon just trying to find a ready-made solution online. It was one of those things where I just didn’t feel like diving in, and as a result I got nowhere. The next day, though, I had motivation again: I started the graphics code from scratch, adding back one function at a time. This turned out to be a really good thing to do because now I understand the graphics code. I was also able to consolidate all the OpenGL code from about 4 different .cpp files into a single file. Now all the drawing code is in one place, brilliant! I added the same documentation as I had for ARRecognition. Man, this project is starting to look very nice!

All of this work has been very satisfying for me, but it makes me a bit sad that no one except me truly appreciates all the vast improvements I have made. The system looks the same when it is running, but the code behind it is so much more reliable and easy to work with. I’m sure it will pay off in London!