TensorKart: self-driving MarioKart with TensorFlow

This winter break, I decided to try and finish a project I started a few years ago: training an artificial neural network to play MarioKart 64. It had been a few years since I’d done any serious machine learning, and I wanted to try out some of the new hotness (aka TensorFlow) I’d been hearing about. The timing was right.

Project - use TensorFlow to train an agent that can play MarioKart 64.

Goal - I wanted to make the end-to-end process easy to understand and follow, since I find this is often missing from machine learning demos.

Finally, after playing way too much MarioKart and writing an emulator plugin in C, I managed to get some decent results.

Driving a new (untrained) section of the Royal Raceway:

Driving Luigi Raceway:

Getting to this point wasn’t easy and I’d like to share my process and what I learned along the way.

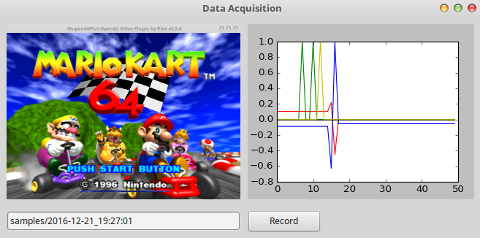

Training data

To create a training dataset, I wrote a program to take screenshots of my desktop synced with input from my Xbox controller. Then I ran an N64 emulator and positioned the window in the capture area. Using this program, I recorded a dataset about what my AI would see and what the appropriate action was.
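The core of such a recorder is a loop that grabs a frame and reads the controller at the same moment, then stores the pair. The sketch below is a minimal illustration of that idea, not the actual program from this project: `record`, `grab_frame` and `read_controller` are hypothetical names, and the two fake functions stand in for real screen-capture and joystick code.

```python
import time
from typing import Callable, List, Sequence, Tuple

Frame = bytes               # stand-in for a screenshot; real code would hold image data
Controls = Sequence[float]  # e.g. joystick axes and button states


def record(grab_frame: Callable[[], Frame],
           read_controller: Callable[[], Controls],
           n_samples: int,
           interval_s: float = 0.0) -> List[Tuple[Frame, Tuple[float, ...]]]:
    """Capture n_samples (screenshot, controller state) pairs."""
    samples = []
    for _ in range(n_samples):
        # Read both sources back to back so the label matches what was on screen.
        frame = grab_frame()
        controls = tuple(read_controller())
        samples.append((frame, controls))
        if interval_s > 0:
            time.sleep(interval_s)
    return samples


# Fake sources so the sketch runs without a screen or a controller (assumptions).
def fake_grab_frame() -> Frame:
    return b"\x00" * 12


def fake_read_controller() -> Controls:
    return [0.5, 0.0, 1.0]


dataset = record(fake_grab_frame, fake_read_controller, n_samples=3)
print(len(dataset), dataset[0][1])
```

Passing the capture and controller functions in as arguments keeps the pairing logic testable without any hardware attached.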

This was the only part I finished back when I started this project. It was interesting to see the difference in my own coding style and tool choices from several years ago. My urge to update this code was strong, but if it ain’t broke don’t fix it. So other than a bit of clean up, I mostly left things as they were.

Model

I started by modifying the TensorFlow tutorial for a character recognizer using the MNIST dataset. I had to change the input and output layer sizes as well as the inner layers since my images were much larger than the 28x28 characters from MNIST. This was a bit tedious and I feel like TensorFlow could have been more helpful with these changes.

Later, I switched to Nvidia’s Autopilot model, which was developed specifically for self-driving vehicles. This also simplified my data preparation code: instead of manually converting each image to grayscale and flattening it to a vector, I could feed colour images to the model directly.
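To make the manual preparation step concrete, here is a rough sketch of converting an RGB image to a flat grayscale vector. It is an illustration only: the function name is hypothetical, the image is a plain nested list rather than a real screenshot, and the luma weights (0.299, 0.587, 0.114) are a common convention that this project may or may not have used.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def to_grayscale_vector(image: Sequence[Sequence[Pixel]]) -> List[float]:
    """Flatten rows of RGB pixels into one list of grayscale values in [0, 1]."""
    return [
        (0.299 * r + 0.587 * g + 0.114 * b) / 255.0
        for row in image
        for (r, g, b) in row
    ]


# A tiny 2x2 "screenshot": white, black, pure red, black.
tiny_image = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 0)],
]
vector = to_grayscale_vector(tiny_image)
print(len(vector))
```

A model that accepts colour images directly skips this step and keeps the colour information that the grayscale conversion throws away.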

Training

To train a TensorFlow model, you have to define a function for TensorFlow to optimize. In the MNIST tutorial, this function is the cross-entropy between the predicted and true labels. Nvidia’s Autopilot uses the difference between the predicted and recorded steering angle. For my project, there are several important joystick outputs, so the function I used was the Euclidean distance between the predicted output vector and the recorded one.
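As a plain-Python illustration of that distance (not the TensorFlow code used in the project), the sketch below computes the Euclidean distance between two output vectors. The three-element layout of the vectors is an assumption made for the example.

```python
import math
from typing import Sequence


def euclidean_loss(predicted: Sequence[float], recorded: Sequence[float]) -> float:
    """Euclidean distance between the predicted and recorded output vectors."""
    if len(predicted) != len(recorded):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(predicted, recorded)))


# Hypothetical output vectors; the values are chosen to give a round result.
predicted = [0.0, 0.0, 1.0]
recorded = [3.0, 4.0, 1.0]
loss = euclidean_loss(predicted, recorded)
print(loss)  # 5.0, since sqrt(3^2 + 4^2 + 0^2) = 5
```

The loss is zero only when every output matches the recording, and a large miss on any single output raises it.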

One of TensorFlow’s best features is this explicit function definition. Higher level machine learning frameworks might abstract this, but TensorFlow forces the developer to think about what is happening and helps dispel the magic around machine learning.

Training was actually the easiest part of this project. TensorFlow has great documentation and there are plenty of tutorials and source code available.

Playing

With my model trained, I was ready to let it loose on MarioKart 64. But there was still a piece missing: how was I going to transfer output from my AI to the N64 emulator? The first thing I tried was to send input events using python-uinput. This almost worked and several joystick utilities believed my program was a proper joystick, but unfortunately not mupen64plus (the N64 emulator).

By this point, I was already digging into how mupen64plus-input-sdl worked (to see why it didn’t like my fake input), so it seemed like a better idea to write my own input plugin rather than trying to hack through multiple layers with fake joystick events.

Rabbit Hole - writing a mupen64plus input plugin

I hadn’t written a proper C program in quite a while, and I was excited to give it a go. I started by doing a curated copy paste of the original input driver. My goal was to get a bare bones plugin compiled and running inside the emulator. When the plugin is loaded, the emulator checks for several function definitions and errors if any are missing. This meant my plugin needed to have several empty functions defined. I thought this was interesting and pretty different from how I’m used to connecting things.

With my plugin working, I figured out how to set the controller output. I made Mario drive donuts forever.

The next step was to ask a local server what the input should be. This required making an HTTP request in C, which turned out to be quite the task. People complain that distributed systems are hard - and they are! - but they forget that it used to be pretty hard to distribute them in the first place. It took me a while to figure out that my HTTP request was missing the extra newline that marks the end of the headers (and was thus malformed). This caused my Python server to hang and never respond, since it was still waiting for the request to finish. After a few more interesting mishaps, I finally finished consuming data and outputting it to the N64 emulator internally.
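The missing-newline bug is easier to see when the request is built by hand. The sketch below is in Python rather than C and is not the plugin's code: the function names and the `localhost:8082` address are assumptions made for the example. An HTTP request's header block ends with an empty line, so the raw bytes must finish with two CRLF pairs.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Build a minimal raw HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",
    ]
    # Every header line ends with CRLF; one extra CRLF (an empty line)
    # tells the server that the header block is finished.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def headers_complete(raw: bytes) -> bool:
    """True once the blank line that terminates the headers has arrived."""
    return b"\r\n\r\n" in raw


good = build_get_request("localhost:8082")  # host and port are placeholders
bad = good[:-2]                             # same request without the final CRLF

print(headers_complete(good), headers_complete(bad))
```

A server that reads until `headers_complete` is true would answer the first request and keep waiting on the second, which matches the hang described above.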

My completed input plugin is available on GitHub.

Playing Revisited

Now my AI could play! The first race was pretty disappointing - Mario drove straight into the wall and made no attempt to turn.

To debug, I added a manual override, allowing me to take control from the AI when needed. I observed the output while playing and stepped through the whole system again. I came up with 2 things to fix for the next iteration:

- While re-inspecting my training data, I found that the screenshot was occasionally a picture of my desktop behind the emulator window. I didn’t bother looking into why this happened since I’d been down enough rabbit holes on this project already. My solution was simply to remove these bad samples from my data and move on.

- I noticed that MarioKart is a very jerky game in that you typically don’t take corners smoothly. Instead, players usually make several sharp adjustments throughout a large turn. If you think about what the agent sees at each iteration, this jerkiness could explain why Mario didn’t turn. Thanks to aliasing of the data, there would be images of Mario in the middle of a turn both with and without a joystick output indicating the turn. I suspect the model learned to never turn and that this actually resulted in the smallest error over the dataset. Training is still a dumb optimization problem and it will happily settle on this if the data leads it there.

With this in mind I played more MarioKart to record new training data. I remember thinking to myself while trying to drive perfectly, “is this how parents feel when they’re driving with their children who are almost 16?”
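The aliasing argument above can be checked with a toy calculation. The numbers are invented for illustration and are not from the real dataset: assume ten nearly identical mid-turn frames, of which only two were recorded with the joystick actually turned. If the model has to give one answer for all ten, the sketch compares the squared error of a few candidate answers.

```python
from typing import List


def mean_squared_error(prediction: float, labels: List[float]) -> float:
    """Average squared error of one constant prediction over all labels."""
    return sum((prediction - y) ** 2 for y in labels) / len(labels)


# Hypothetical labels for ten look-alike frames: 1.0 = full turn, 0.0 = no turn.
labels = [1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]

# The constant that minimizes squared error is the mean of the labels.
best_constant = sum(labels) / len(labels)

error_best = mean_squared_error(best_constant, labels)
error_never_turn = mean_squared_error(0.0, labels)
error_always_turn = mean_squared_error(1.0, labels)

print(best_constant, error_best, error_never_turn, error_always_turn)
```

Under these assumptions the best single answer is a weak turn of about 0.2, and "never turn" scores far better than "always turn", so an optimizer has little reason to commit to the corner.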

With those 2 adjustments I got the results you saw at the beginning of this blog post. Hooray!

Final Thoughts

TensorFlow is super cool despite being a lower level abstraction than tools I used several years ago (scikit-learn and shogun). The speed it offers (thanks to cuDNN) is worth it and its gradient descent optimizers seem really top notch. If I were doing another deep learning project, I probably wouldn’t use TensorFlow directly; I’d try Keras since doing the math for layer sizes sucks (Keras is built on TensorFlow though so it’s basically syntactic sugar).

In a couple of days over winter break, I was able to train an AI to drive a virtual vehicle using the same technique Google uses for their self-driving cars. With approximately 20 minutes of training data, my AI was able to drive the majority of the simplest course, Luigi Raceway, and generalized to at least one section of an untrained racetrack. With more data, I bet we could build a complete AI for MarioKart 64.

Source code:

github.com/kevinhughes27/TensorKart

github.com/kevinhughes27/mupen64plus-input-bot

Update / Reception

This post went viral on the internet!

It started by topping Hacker News.

It did pretty well on /r/programming as well. Then it got picked up by Google News, LinkedIn and all sorts of content sites. I personally got a kick out of it when Google suggested my own article to me 😂

TensorKart is still doing laps of the internet to this day thanks to various Twitter bots.
